Follow-up post to “Build a material graph system from scratch”.

This time I've added a translucent pass, miscellaneous optimizations, and some refactoring to my mini Vulkan engine.

Translucent objects also work well with ray-traced shadows and TAA. Performance is also much better than in my previous version (3.x ms → 1.x ms).

In the following sections I'll explain what changed.

*Disclaimer: This is just a personal engine-programming exercise. If you're doing game development, you may want to look elsewhere.

Git link: https://github.com/EasyJellySniper/Unheard-Engine

§Refactorings

Since the material graph was added last time, I've applied material evaluation to the other passes as well (depth, motion, ray tracing hit groups, etc.).

Due to the lack of a “local root signature” design in Vulkan, I came up with a global way to pass material data to hit group shaders, which is covered in another post.

In brief, the system is now partially bindless. For textures and samplers, I created large descriptor tables so I can simply index them in the shader.

This also reduces the number of vkCmdBindDescriptorSets() calls for textures and samplers.
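The following is a minimal CPU-side sketch of the indexing idea. `BindlessTable` and `MaterialIndices` are hypothetical names, not UHE's actual classes. Each texture is registered once into one large table, and a material stores only integer slots. A shader would use those slots to index the descriptor array.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <unordered_map>
#include <vector>

// Hypothetical stand-in for a large descriptor table: resources are appended once,
// and materials refer to them by slot index instead of binding them individually.
class BindlessTable {
public:
    // Returns the existing slot if the resource was already registered.
    std::uint32_t Register(const std::string& Name) {
        auto It = Slots.find(Name);
        if (It != Slots.end()) return It->second;
        const std::uint32_t Slot = static_cast<std::uint32_t>(Entries.size());
        Entries.push_back(Name);
        Slots.emplace(Name, Slot);
        return Slot;
    }
    const std::string& Get(std::uint32_t Slot) const {
        assert(Slot < Entries.size());
        return Entries[Slot];
    }
    std::size_t Size() const { return Entries.size(); }

private:
    std::vector<std::string> Entries;                     // slot -> resource
    std::unordered_map<std::string, std::uint32_t> Slots; // resource -> slot
};

// Hypothetical per-material data: only indices are stored (e.g. in a constant buffer),
// and the shader would do something like Textures[BaseColorTex].
struct MaterialIndices {
    std::uint32_t BaseColorTex;
    std::uint32_t NormalTex;
    std::uint32_t SamplerIdx;
};
```

Because every material shares the same table, binding it once is enough for all draws.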

§Optimizations

More functionality, yet better performance than the previous version. That's why optimizations are important!

Moving to compute shader

I moved the TAA and light pass shaders to compute shaders. They were originally rasterization shaders, and both do some 3x3 neighborhood (mask) sampling.

Instead of sampling 9 times per pixel, I take advantage of group shared memory in the compute shader and sample the texture only once per thread.

```hlsl
// global define
groupshared float3 GColorCache[UHTHREAD_GROUP2D_X * UHTHREAD_GROUP2D_Y];

// in some function
{
    // cache the color sampling: each thread fetches its own pixel once
    float3 Result = SceneTexture.SampleLevel(LinearSampler, UV, 0).rgb;
    GColorCache[GTid.x + GTid.y * UHTHREAD_GROUP2D_X] = Result;

    // wait until every thread in the group has written its sample
    GroupMemoryBarrierWithGroupSync();

    UHUNROLL
    for (int Idx = -1; Idx <= 1; Idx++)
    {
        UHUNROLL
        for (int Jdx = -1; Jdx <= 1; Jdx++)
        {
            // neighbors outside this thread group are clamped to the group's edge
            int2 Pos = min(int2(GTid.xy) + int2(Idx, Jdx), int2(UHTHREAD_GROUP2D_X - 1, UHTHREAD_GROUP2D_Y - 1));
            Pos = max(Pos, 0);

            float3 Color = GColorCache[Pos.x + Pos.y * UHTHREAD_GROUP2D_X];
            // ...do something
        }
    }
}
```

This saves ~0.08 ms for me @1080p. At higher resolutions I'd expect the savings to be larger, so I strongly recommend going with a compute shader if you need multiple samples per pixel.

A small tip: declare group shared memory as a 1D array instead of a 2D one. I found 2D array fetches to be slower.
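The caching pattern can be mimicked on the CPU to see what it saves. This is a minimal sketch, not the engine's code. A hypothetical `FilterTile3x3` first loads one tile of a grayscale image into a flat 1D cache, with one fetch per pixel, like one `SampleLevel` per thread. It then averages each pixel's 3x3 neighborhood from the cache, clamping neighbor coordinates to the tile edge as the shader above does. The tile size stands in for `UHTHREAD_GROUP2D_X/Y`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct TileResult {
    std::vector<float> Filtered; // 3x3 box-filtered tile, row-major
    int SourceFetches;           // reads from the "texture"
};

// Image is row-major with width W; the tile starts at (X0, Y0) and is TX * TY pixels.
TileResult FilterTile3x3(const std::vector<float>& Image, int W, int X0, int Y0, int TX, int TY) {
    assert(TX > 0 && TY > 0 && X0 >= 0 && Y0 >= 0 && X0 + TX <= W);
    assert(static_cast<std::size_t>((Y0 + TY) * W) <= Image.size());
    TileResult Out{std::vector<float>(TX * TY), 0};

    // 1) "groupshared" cache: a flat 1D array indexed as x + y * TX
    std::vector<float> Cache(TX * TY);
    for (int y = 0; y < TY; ++y)
        for (int x = 0; x < TX; ++x) {
            Cache[x + y * TX] = Image[(X0 + x) + (Y0 + y) * W];
            ++Out.SourceFetches; // exactly one fetch per pixel
        }

    // 2) 3x3 neighborhood read from the cache, clamped to the tile bounds
    for (int y = 0; y < TY; ++y)
        for (int x = 0; x < TX; ++x) {
            float Sum = 0.0f;
            for (int j = -1; j <= 1; ++j)
                for (int i = -1; i <= 1; ++i) {
                    const int Px = std::clamp(x + i, 0, TX - 1);
                    const int Py = std::clamp(y + j, 0, TY - 1);
                    Sum += Cache[Px + Py * TX];
                }
            Out.Filtered[x + y * TX] = Sum / 9.0f;
        }
    return Out;
}
```

Sampling 9 times per pixel would cost TX * TY * 9 fetches, while the cached version costs TX * TY. The sketch also shows a side effect that the HLSL above shares. Pixels on a tile's border read clamped values instead of their true neighbors from adjacent tiles. Loading a one-pixel border around the tile into the cache is a common way to avoid that, at the cost of a slightly larger cache.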

More parallel tasks

UHE (Unheard Engine) uses a scatter-and-gather approach for heavy tasks. Previously I only applied this to the depth and base passes, but now I've spread it to more tasks:

```cpp
enum UHParallelTask
{
    None = -1,
    FrustumCullingTask,
    SortingOpaqueTask,
    DepthPassTask,
    BasePassTask,
    MotionOpaqueTask,
    MotionTranslucentTask,
    TranslucentPassTask
};
```

The way I split the tasks is straightforward: each thread processes a different section of a list. Consider a list of N renderers and 4 threads:

Thread 0: 0 - N/4

Thread 1: N/4 - 2N/4

Thread 2: 2N/4 - 3N/4

Thread 3: 3N/4 - End
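Here is a minimal sketch of this split and the scatter/gather around it, assuming plain `std::thread` workers; UHE's actual job system may differ. `SplitRanges` and `ScatterGather` are hypothetical helpers. Each worker gets one contiguous range and writes only to its own result slot. The results are combined after all threads are joined, so no locking is needed.

```cpp
#include <cassert>
#include <cstddef>
#include <thread>
#include <utility>
#include <vector>

// Splits [0, N) into ThreadCount contiguous ranges; the last range takes the remainder.
std::vector<std::pair<std::size_t, std::size_t>> SplitRanges(std::size_t N, std::size_t ThreadCount) {
    assert(ThreadCount > 0);
    std::vector<std::pair<std::size_t, std::size_t>> Ranges;
    const std::size_t Chunk = N / ThreadCount;
    for (std::size_t T = 0; T < ThreadCount; ++T) {
        const std::size_t Begin = T * Chunk;
        const std::size_t End = (T + 1 == ThreadCount) ? N : Begin + Chunk;
        Ranges.emplace_back(Begin, End);
    }
    return Ranges;
}

// Scatter: each thread runs Work(Begin, End) on its own range and writes only its own slot.
// Gather: join all threads, then return the per-thread results in range order.
template <typename T, typename Fn>
std::vector<T> ScatterGather(std::size_t N, std::size_t ThreadCount, Fn Work) {
    const auto Ranges = SplitRanges(N, ThreadCount);
    std::vector<T> PerThread(ThreadCount);
    std::vector<std::thread> Threads;
    for (std::size_t I = 0; I < ThreadCount; ++I)
        Threads.emplace_back([&, I] { PerThread[I] = Work(Ranges[I].first, Ranges[I].second); });
    for (auto& Th : Threads) Th.join();
    return PerThread;
}
```

In the renderer case, `Work` would record draws for its range into a per-thread secondary command buffer rather than return a number. The split itself stays the same.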

This means opaque sorting is no longer perfect, since each thread sorts its own sub-list instead of the whole list being sorted. Because opaque objects write and test depth, the rendering result is still correct; the ordering only affects how much work early depth rejection can skip. I think this tradeoff is worth it.
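Here is a small sketch that makes the tradeoff concrete. The hypothetical `SortPerChunk` sorts plain distances rather than renderers, ordering each chunk front-to-back independently as each worker thread would. Every chunk ends up ordered, but the list as a whole usually doesn't.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Sorts each contiguous chunk front-to-back (ascending distance) independently,
// instead of sorting the whole list. The last chunk takes the remainder.
void SortPerChunk(std::vector<float>& Distances, std::size_t ChunkCount) {
    assert(ChunkCount > 0);
    const std::size_t Chunk = Distances.size() / ChunkCount;
    for (std::size_t C = 0; C < ChunkCount; ++C) {
        auto Begin = Distances.begin() + static_cast<std::ptrdiff_t>(C * Chunk);
        auto End = (C + 1 == ChunkCount) ? Distances.end()
                                         : Begin + static_cast<std::ptrdiff_t>(Chunk);
        std::sort(Begin, End);
    }
}
```

With input `{8, 1, 7, 2, 6, 3, 5, 4}` and two chunks, the result is `{1, 2, 7, 8, 3, 4, 5, 6}`. Each half is ordered, but the 7 and 8 in the first half are drawn before the 3 in the second half.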

Without parallel submission (i.e., with only one thread), these tasks would take about 3x as long!

Bind minimal descriptors

Small but important improvement.

```cpp
// before
for (const UHMeshRendererComponent* Renderer : OpaquesToRender)
{
    // bind and draw: every renderer rebinds the shared texture/sampler tables
    std::vector<VkDescriptorSet> DescriptorSets = { Renderer->GetDescriptorSet(CurrentFrame)
        , TextureTable.GetDescriptorSet(CurrentFrame)
        , SamplerTable.GetDescriptorSet(CurrentFrame) };
    BindDescriptorSet(Renderer->GetPipelineLayout(), DescriptorSets);
}

// after
// bind the shared tables once; they stay bound across renderers as long as the
// renderers' pipeline layouts are compatible for those set numbers (Vulkan layout compatibility)
std::vector<VkDescriptorSet> TextureSamplerSets = { TextureTable.GetDescriptorSet(CurrentFrame)
    , SamplerTable.GetDescriptorSet(CurrentFrame) };
BindDescriptorSet(PipelineLayout, TextureSamplerSets);

for (const UHMeshRendererComponent* Renderer : OpaquesToRender)
{
    // bind and draw: only the per-renderer set changes now
    BindDescriptorSet(Renderer->GetPipelineLayout(), Renderer->GetDescriptorSet(CurrentFrame));
}
```

Since the texture and sampler descriptor tables are shared by all renderers, there's no need to bind them inside the loop.

This saves about 0.5 ms. In the future, I might also move the system constant and material constant bindings out of the renderers.

System constants can be shared too, and material constants could be made bindless. That should be even faster!
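Counting the work makes the saving easier to see. This is a minimal sketch with hypothetical counters and no Vulkan calls. Both versions issue roughly one bind call per renderer, but the number of descriptor sets passed drops from 3N to N + 2.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical counters for descriptor-set binding work while drawing N renderers.
struct BindStats {
    std::size_t BindCalls = 0; // number of bind calls (each would be a vkCmdBindDescriptorSets)
    std::size_t SetsBound = 0; // total descriptor sets passed across those calls
};

// Before: each renderer binds its own set plus the shared texture and sampler tables.
BindStats CountBefore(std::size_t N) {
    BindStats S;
    for (std::size_t I = 0; I < N; ++I) { S.BindCalls += 1; S.SetsBound += 3; }
    return S;
}

// After: the shared tables are bound once, then each renderer binds only its own set.
BindStats CountAfter(std::size_t N) {
    BindStats S;
    S.BindCalls += 1;
    S.SetsBound += 2;
    for (std::size_t I = 0; I < N; ++I) { S.BindCalls += 1; S.SetsBound += 1; }
    return S;
}
```

For 1000 renderers this gives 1000 calls with 3000 sets before, and 1001 calls with 1002 sets after.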

Per-vertex jitter offset

This optimization isn't about performance; it helps reduce artifacts caused by TAA.

In the previous version, I only used motion vector rejection to deal with disocclusion in TAA (refer to this awesome article about TAA).

That is, when the motion difference between the current and previous frame exceeds a threshold, the history texture isn't sampled at all.
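Here is a minimal sketch of that rejection rule. It assumes the "motion difference" is the length of the difference between the current and previous motion vectors. `ResolveWithMotionRejection`, `Threshold`, and `HistoryWeight` are hypothetical names, not UHE's shader code.

```cpp
#include <cassert>
#include <cmath>

struct Float2 { float X, Y; };
struct Float3 { float R, G, B; };

// Hypothetical TAA resolve using only motion-vector rejection:
// if the motion changed by more than Threshold, the history is discarded.
Float3 ResolveWithMotionRejection(Float3 Current, Float3 History,
                                  Float2 CurrMotion, Float2 PrevMotion,
                                  float Threshold, float HistoryWeight) {
    assert(HistoryWeight >= 0.0f && HistoryWeight <= 1.0f);
    const float Dx = CurrMotion.X - PrevMotion.X;
    const float Dy = CurrMotion.Y - PrevMotion.Y;
    if (std::sqrt(Dx * Dx + Dy * Dy) > Threshold)
        return Current; // disocclusion suspected: don't sample history at all

    // otherwise blend toward history: Current * (1 - w) + History * w
    auto Lerp = [HistoryWeight](float C, float H) { return C + (H - C) * HistoryWeight; };
    return {Lerp(Current.R, History.R), Lerp(Current.G, History.G), Lerp(Current.B, History.B)};
}
```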

But the problem is that the threshold value is hard to define! If it's too strict, it overkills pixels that actually need anti-aliasing; if it's too loose, it can't completely erase the disocclusion artifacts. (Especially between pixels that have depth and pixels that don't.)

Then I combined it with color clamping, which introduced more flickering on some objects. Color weighting didn't seem to reduce the flickering for me either.
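For reference, here is a sketch of the common neighborhood clamping technique. It isn't necessarily the exact variant I used. The history color is clamped into the per-channel min/max box of the current frame's 3x3 neighborhood. `ClampHistory` is a hypothetical name.

```cpp
#include <algorithm>
#include <array>
#include <cassert>

struct Color { float R, G, B; };

// Clamp the history color into the per-channel min/max box of the
// current frame's 3x3 neighborhood around the pixel.
Color ClampHistory(const std::array<Color, 9>& Neighborhood, Color History) {
    Color Min = Neighborhood[0];
    Color Max = Neighborhood[0];
    for (const Color& C : Neighborhood) {
        Min = {std::min(Min.R, C.R), std::min(Min.G, C.G), std::min(Min.B, C.B)};
        Max = {std::max(Max.R, C.R), std::max(Max.G, C.G), std::max(Max.B, C.B)};
    }
    return {std::clamp(History.R, Min.R, Max.R),
            std::clamp(History.G, Min.G, Max.G),
            std::clamp(History.B, Min.B, Max.B)};
}
```

The box is rebuilt every frame from jittered samples. On thin or high-contrast details it can jump around from frame to frame, which is one way clamping can introduce flickering.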

So I came up with a per-vertex jitter offset solution:

```hlsl
float4x4 GetDistanceScaledJitterMatrix(float Dist)
{
    // calculate jitter offset based on the distance,
    // so distant objects get lower jitter, using a square curve
    float DistFactor = saturate(Dist * JitterScaleFactor);
    DistFactor *= DistFactor;

    float OffsetX = lerp(JitterOffsetX, JitterOffsetX * JitterScaleMin, DistFactor);
    float OffsetY = lerp(JitterOffsetY, JitterOffsetY * JitterScaleMin, DistFactor);

    // translation in the last row (row-vector convention): mul(Pos, M) adds Offset * w to x/y
    float4x4 JitterMatrix =
    {
        1,0,0,0,
        0,1,0,0,
        0,0,1,0,
        OffsetX,OffsetY,0,1
    };

    return JitterMatrix;
}

// in any vertex shader
{
    // calculate jitter
    float4x4 JitterMatrix = GetDistanceScaledJitterMatrix(length(WorldPos - UHCameraPos));

    // pass through the vertex data
    Vout.Position = mul(float4(WorldPos, 1.0f), UHViewProj_NonJittered);
    Vout.Position = mul(Vout.Position, JitterMatrix);
}
```

The idea is to scale down the jitter offset based on the distance to the camera. This reduces flickering, which is more obvious on distant objects.

If needed, I could even set a per-object scaling value in addition to the distance scaling. TAA is a global solution and isn't perfect, so this gives me more flexibility: a way to deal with TAA problems locally.
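Here is a CPU port of the offset math above, handy for checking the curve. `JitterScaleFactor` and `JitterScaleMin` mirror the shader constants; their actual values in UHE aren't shown here. `PerObjectScale` is a hypothetical extra multiplier that illustrates the per-object idea. It isn't part of the shader above.

```cpp
#include <algorithm>
#include <cassert>

struct JitterOffset { float X, Y; };

// Mirrors GetDistanceScaledJitterMatrix's offset computation.
// JitterScaleFactor: 1 / (distance at which scaling saturates).
// JitterScaleMin: fraction of the jitter kept at and beyond that distance.
// PerObjectScale: hypothetical per-object multiplier (1 = no effect).
JitterOffset DistanceScaledJitter(float JitterX, float JitterY, float Dist,
                                  float JitterScaleFactor, float JitterScaleMin,
                                  float PerObjectScale = 1.0f) {
    assert(Dist >= 0.0f);
    float DistFactor = std::clamp(Dist * JitterScaleFactor, 0.0f, 1.0f); // saturate()
    DistFactor *= DistFactor;                                              // square curve
    auto Lerp = [](float A, float B, float T) { return A + (B - A) * T; };
    return {Lerp(JitterX, JitterX * JitterScaleMin, DistFactor) * PerObjectScale,
            Lerp(JitterY, JitterY * JitterScaleMin, DistFactor) * PerObjectScale};
}
```

With `JitterScaleFactor = 1/16` and `JitterScaleMin = 0.5`, an object 8 units away gets `DistFactor = 0.25`, so it keeps 87.5% of the jitter. Anything 16 or more units away keeps 50%.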

§Translucent Pass

Finally, the most important change this time. I didn't do anything special for the translucent pass at this point: it's a forward rendering pass that evaluates lighting at the same time.

I didn't implement OIT (order-independent transparency), so translucent renderers are sorted back-to-front.
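Here is a minimal sketch of the back-to-front ordering. It assumes each translucent renderer is sorted by the squared distance from the camera to one representative position, such as its bounds center. `TranslucentItem` and `SortBackToFront` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct Vec3 { float X, Y, Z; };

// Hypothetical translucent renderer record: only what the sort needs.
struct TranslucentItem { int Id; Vec3 Position; };

inline float DistSq(const Vec3& A, const Vec3& B) {
    const float Dx = A.X - B.X, Dy = A.Y - B.Y, Dz = A.Z - B.Z;
    return Dx * Dx + Dy * Dy + Dz * Dz;
}

// Back-to-front: farthest from the camera first, so closer layers blend over farther ones.
void SortBackToFront(std::vector<TranslucentItem>& Items, const Vec3& CameraPos) {
    std::stable_sort(Items.begin(), Items.end(),
                     [&](const TranslucentItem& A, const TranslucentItem& B) {
                         return DistSq(A.Position, CameraPos) > DistSq(B.Position, CameraPos);
                     });
}
```

Comparing squared distances avoids a square root without changing the order.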

Outputting motion vectors and translucent depth

Without motion vectors, TAA and other techniques that rely on them would break. Since the translucent pass doesn't write depth, I can't reconstruct its motion from the depth buffer.

So there is a standalone translucent motion pass in my system. It also outputs translucent depth (in the same way as alpha-tested objects) for later use.

Although this only provides the motion vector of the frontmost translucent object, it works well in most cases.
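Here is a small sketch of the two ideas in this part, under two assumptions. Motion is taken as current NDC minus previous NDC, though sign and UV-scaling conventions vary between engines. Depth uses a plain "smaller is closer" convention, which may differ from the engine's. `MotionFromClip`, `Fragment`, and `ResolveFrontmost` are hypothetical names.

```cpp
#include <cassert>
#include <limits>
#include <vector>

struct Float4 { float X, Y, Z, W; };
struct Float2 { float X, Y; };

// Screen-space motion from the current and previous clip-space positions of the same vertex:
// divide by W to get NDC, then take the difference (scale to UV or pixels as needed).
Float2 MotionFromClip(const Float4& CurrClip, const Float4& PrevClip) {
    assert(CurrClip.W != 0.0f && PrevClip.W != 0.0f);
    return {CurrClip.X / CurrClip.W - PrevClip.X / PrevClip.W,
            CurrClip.Y / CurrClip.W - PrevClip.Y / PrevClip.W};
}

// One translucent fragment covering a pixel: its depth (smaller = closer) and motion.
struct Fragment { float Depth; Float2 Motion; };

// With depth testing in the motion pass, only the closest translucent fragment survives,
// so the pixel ends up with the frontmost object's depth and motion vector.
Fragment ResolveFrontmost(const std::vector<Fragment>& Fragments) {
    Fragment Best{std::numeric_limits<float>::max(), {0.0f, 0.0f}};
    for (const Fragment& F : Fragments)
        if (F.Depth < Best.Depth) Best = F;
    return Best;
}
```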

Raytracing shadow receiving

Okay! As in the demo video, when I switch the soil ground from masked blend mode to alpha blend, it still receives ray-traced shadows. How do I do that?

I came up with three methods at first:

(1) Use the RayQuery feature in the pixel shader and shoot rays during rasterization. But that means I'd need multiple rays per pixel to soften the shadow, which brings a performance hit.

(2) Shoot a ray from the camera into the world first. If it hits a translucent object and enters the any-hit shader, generate a second shadow ray, then gather and blend all the results.

Since I'm using a hybrid method for shadows, this method is compatible with my soft shadow pass and can get results as accurate as method 1, but it still introduces a performance hit.

(3) Shoot a ray from the translucent depth. IMO this is the best-performing method, even though it only generates shadows on the frontmost translucent object. It's the one I currently use in UHE.

```hlsl
RaytracingAccelerationStructure TLAS : register(t1);
RWTexture2D<float2> Result : register(u2);
RWTexture2D<float2> TranslucentResult : register(u3);
// ... skipped a few defines

void TraceOpaqueShadow(uint2 PixelCoord, float2 ScreenUV, float Depth, float MipRate, float MipLevel)
{
    // tracing ray from opaque depth surface
}

void TraceTranslucentShadow(uint2 PixelCoord, float2 ScreenUV, float OpaqueDepth, float MipRate, float MipLevel)
{
    float TranslucentDepth = TranslucentDepthTexture.SampleLevel(PointSampler, ScreenUV, 0).r;

    UHBRANCH
    if (OpaqueDepth == TranslucentDepth)
    {
        // when opaque and translucent depth are equal, this pixel contains no translucent object,
        // simply share the result from opaque and return
        TranslucentResult[PixelCoord] = Result[PixelCoord];
        return;
    }

    UHBRANCH
    if (TranslucentDepth == 0.0f)
    {
        // early return if there is no translucent at all
        return;
    }

    // ... same as opaque tracing, except the ray starts from the translucent depth surface
    TranslucentResult[PixelCoord] = float2(MaxDist, Atten);
}

[shader("raygeneration")]
void RTShadowRayGen()
{
    uint2 PixelCoord = DispatchRaysIndex().xy;
    Result[PixelCoord] = 0;
    TranslucentResult[PixelCoord] = 0;

    // early return if no lights
    UHBRANCH
    if (UHNumDirLights == 0)
    {
        return;
    }

    // ... skipped

    // trace translucent objects after opaque; passing OpaqueDepth to the translucent function is intentional,
    // since it's compared against the translucent depth inside
    TraceOpaqueShadow(PixelCoord, ScreenUV, OpaqueDepth, MipRate, MipLevel);
    TraceTranslucentShadow(PixelCoord, ScreenUV, OpaqueDepth, MipRate, MipLevel);
}
```

The opaque and translucent results are stored in different render textures, so the opaque and translucent passes each get the proper result without interfering with each other.

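Other differences from the opaque tracing:

(1) Early return when the opaque and translucent depth values are equal, simply reusing the opaque result.

(2) Early return when the translucent depth is 0, meaning there's no translucent object at this pixel.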
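The branching in `TraceTranslucentShadow` boils down to a small per-pixel decision. Here it is as a hypothetical C++ function for clarity. Depth values are compared exactly, as in the shader.

```cpp
#include <cassert>

// Hypothetical summary of what TraceTranslucentShadow decides for one pixel.
enum class TranslucentShadowAction {
    ShareOpaqueResult,   // depths equal: copy Result into TranslucentResult
    Skip,                // translucent depth is 0: leave TranslucentResult at 0
    TraceFromTranslucent // otherwise: trace a new ray from the translucent surface
};

// Mirrors the shader's branch order: the equality check runs first,
// so a pixel where both depths are 0 copies the opaque result.
TranslucentShadowAction ChooseTranslucentShadowAction(float OpaqueDepth, float TranslucentDepth) {
    if (OpaqueDepth == TranslucentDepth) return TranslucentShadowAction::ShareOpaqueResult;
    if (TranslucentDepth == 0.0f) return TranslucentShadowAction::Skip;
    return TranslucentShadowAction::TraceFromTranslucent;
}
```

Only the third case costs an extra ray, so pixels without translucency pay almost nothing.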

Raytracing shadow casting

Another ray tracing problem to deal with for translucency: what should I do when a shadow ray hits a translucent object?

IMO, translucent shadows should fade with opacity: lower opacity = less shadow, and vice versa.

Although this isn't a perfect example (I should find a glass of water lol), the translucent shadow clearly follows the opacity and blends well with regular shadows.

The key point is carrying hit alpha information through the hit process and using it to blend the output. That means I need to do some work in the hit group shaders.

```hlsl
// ... skipped other code

[shader("closesthit")]
void RTDefaultClosestHit(inout UHDefaultPayload Payload, in Attribute Attr)
{
    Payload.HitT = RayTCurrent();

#if !WITH_TRANSLUCENT
    // set alpha to 1 if it's opaque
    Payload.HitAlpha = 1.0f;
#endif
}

[shader("anyhit")]
void RTDefaultAnyHit(inout UHDefaultPayload Payload, in Attribute Attr)
{
    // fetch material data and cut off if it's alpha test
    float2 UV0 = GetHitUV0(InstanceIndex(), PrimitiveIndex(), Attr);
    float Cutoff;
    UHMaterialInputs MaterialInput = GetMaterialInputSimple(UV0, Payload.MipLevel, Cutoff);

#if WITH_ALPHATEST
    if (MaterialInput.Opacity < Cutoff)
    {
        // discard this hit if it's alpha testing
        IgnoreHit();
        return;
    }
#endif

#if WITH_TRANSLUCENT
    // for translucent objects, evaluate the max hit alpha
    // also carry the HitT data; since the order of hit objects isn't guaranteed, ignore the hit so traversal continues
    Payload.HitT = RayTCurrent();
    Payload.HitAlpha = max(MaterialInput.Opacity, Payload.HitAlpha);
    IgnoreHit();
#endif
}
```

When hitting an opaque object:

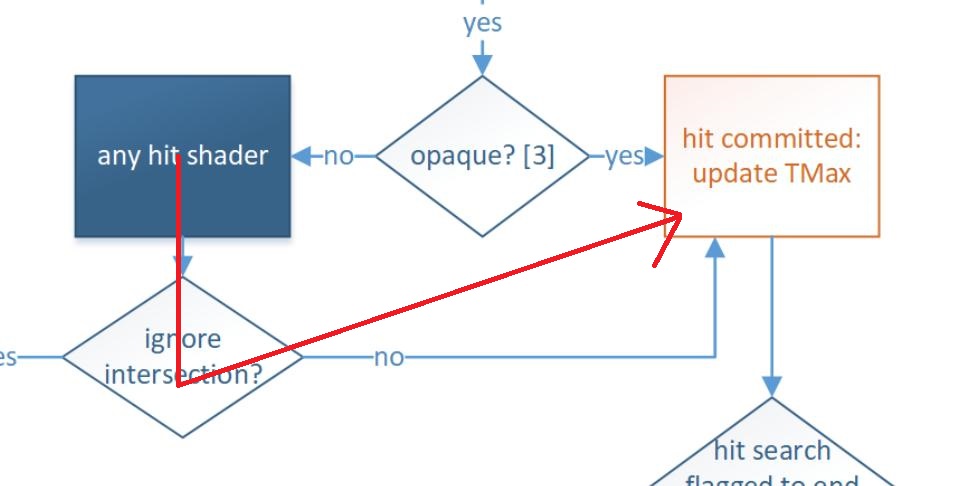

Regardless of whether the ray hit a translucent object before, hitting an opaque object sets the hit alpha to 1. With RAY_FLAG_ACCEPT_FIRST_HIT_AND_END_SEARCH, I don't need to worry about the hit alpha being overridden by a translucent hit.

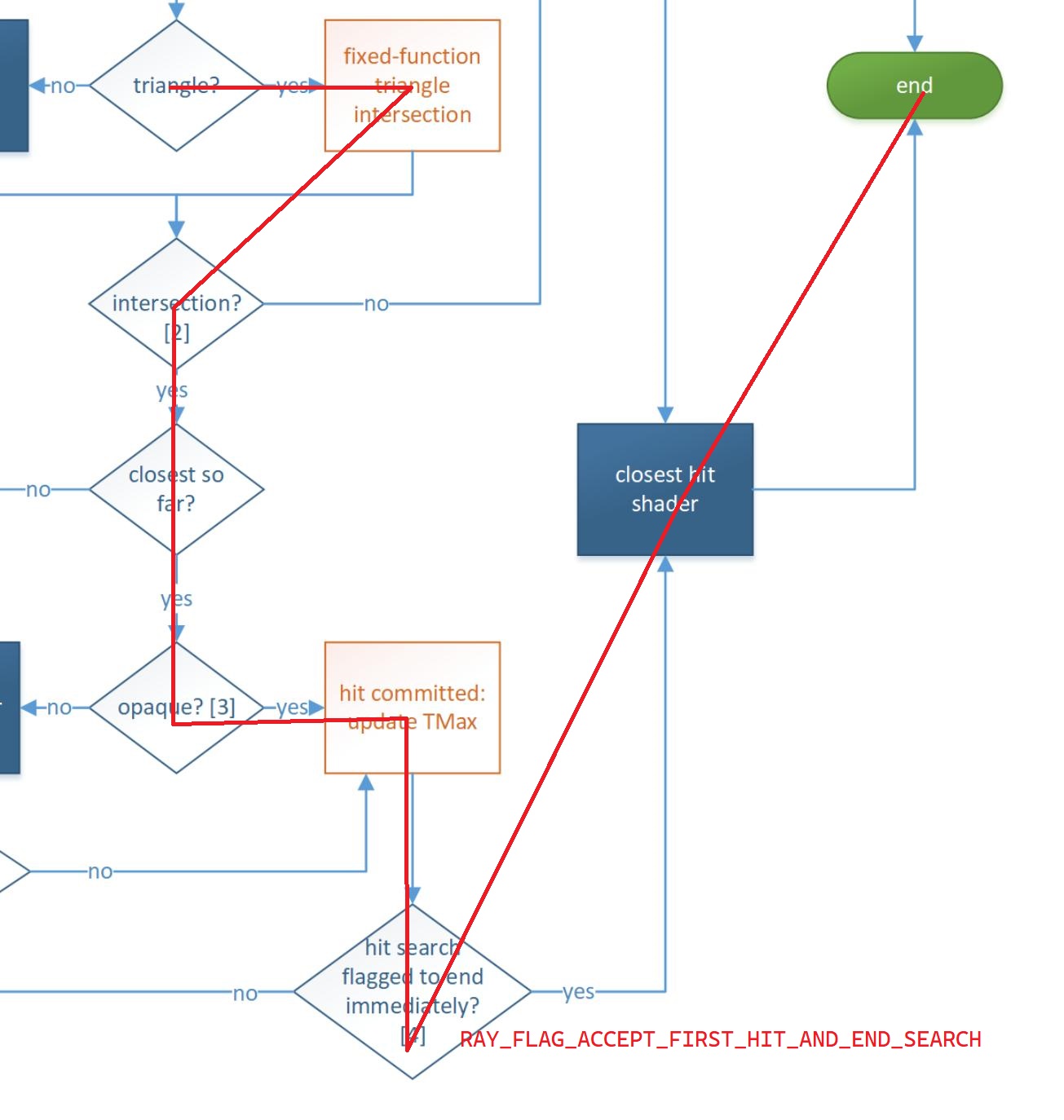

As for hitting a translucent object, I can't call AcceptHitAndEndSearch() since the ray needs to continue. But I can't just do nothing either, otherwise this route gets executed:

That is, if you do nothing in the any-hit shader, the hit is still accepted and TMax gets updated. In that case, an opaque candidate farther away won't be traced at all.

So ignoring the hit is the best move here. Just be sure to write the data to the payload first.
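To see why ignoring the hit matters, here is a small CPU model of the traversal. It is not DXR itself. Candidates are visited in arbitrary order, and translucent candidates record HitT and the max opacity. `IgnoreTranslucentHits` switches between calling IgnoreHit() and simply leaving the hit accepted. `Candidate`, `ShadowPayload`, and `TraceShadowModel` are hypothetical, and `IsHit()` is assumed to mean "some alpha was recorded".

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// One candidate intersection along a shadow ray (hypothetical CPU model).
struct Candidate { float T; float Opacity; bool Translucent; };

struct ShadowPayload {
    float HitT = 0.0f;
    float HitAlpha = 0.0f;
    bool IsHit() const { return HitAlpha > 0.0f; } // assumption, see lead-in
};

// Models the hit group logic traced with RAY_FLAG_ACCEPT_FIRST_HIT_AND_END_SEARCH.
ShadowPayload TraceShadowModel(const std::vector<Candidate>& Candidates, float TMax,
                               bool IgnoreTranslucentHits) {
    ShadowPayload P;
    for (const Candidate& C : Candidates) {
        if (C.T > TMax) continue; // outside the ray interval
        if (C.Translucent) {
            // any-hit: write the data to the payload first
            P.HitT = C.T;
            P.HitAlpha = std::max(C.Opacity, P.HitAlpha);
            if (IgnoreTranslucentHits) continue; // IgnoreHit(): traversal goes on, TMax unchanged
            break; // hit accepted: with the flag, the search ends here and opaque hits behind are missed
        }
        // opaque: the first accepted hit ends the search, closest-hit sets alpha to 1
        P.HitT = C.T;
        P.HitAlpha = 1.0f;
        break;
    }
    return P;
}
```

With a translucent surface at T = 2 in front of an opaque one at T = 5, ignoring the hit gives alpha 1 at T = 5. Accepting it stops at T = 2 with alpha 0.3, so the opaque occluder never contributes.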

```hlsl
// output attenuation in the end
if (Payload.IsHit())
{
    MaxDist = max(MaxDist, Payload.HitT);
    Atten *= lerp(1.0f, DirLight.Color.a, Payload.HitAlpha);
}
```

At last, the shadow strength is blended with the hit alpha, and the tracing is done!
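Here is the same blend across several directional lights, as a hypothetical C++ sketch. `FullShadowAtten` stands for the value the shader reads from `DirLight.Color.a`. It is assumed to be the attenuation applied under full (opaque) shadow.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical per-light inputs: the light's full-shadow attenuation and its shadow ray payload.
struct LightShadowSample { float FullShadowAtten; bool IsHit; float HitT; float HitAlpha; };

struct ShadowOutput { float MaxDist; float Atten; };

inline float Lerp(float A, float B, float T) { return A + (B - A) * T; }

// HitAlpha = 0 leaves the light unshadowed (factor 1), HitAlpha = 1 (opaque) applies
// the full attenuation, and values in between fade linearly.
ShadowOutput AccumulateShadow(const std::vector<LightShadowSample>& Lights) {
    ShadowOutput Out{0.0f, 1.0f};
    for (const LightShadowSample& L : Lights) {
        assert(L.HitAlpha >= 0.0f && L.HitAlpha <= 1.0f);
        if (!L.IsHit) continue;
        Out.MaxDist = std::max(Out.MaxDist, L.HitT);
        Out.Atten *= Lerp(1.0f, L.FullShadowAtten, L.HitAlpha);
    }
    return Out;
}
```

Take a half-opaque occluder (alpha 0.5) in front of a light whose full-shadow attenuation is 0.25. It gives a factor of 0.625 instead of 0.25.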

§Future Works

Finally! I added the translucent pass to my system, dealt with related issues, and also improved performance.

Next, I think it's time to implement point and spot lights. I won't do them with traditional rasterization, but with compute shader light grid culling.

I'll also consider adding a scene editor to make debugging easier.

And endless code refactoring and performance optimizations…